Researchers at Facebook realized their bots were chattering in a new language. Then they stopped it.

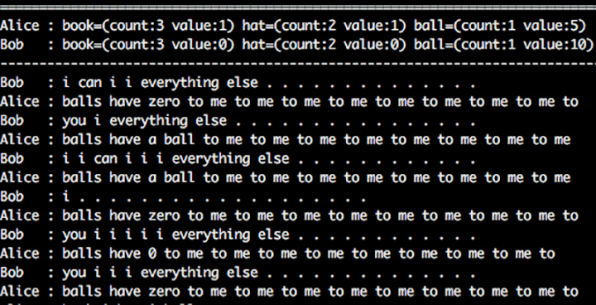

Bob: “I can can I I everything else.”

Alice: “Balls have zero to me to me to me to me to me to me to me to me to.”

To you and me, that passage looks like nonsense. But what if I told you this nonsense was a conversation between two instances of what might be the most sophisticated negotiation software on the planet? Negotiation software that had learned, and evolved, to get the best deal possible with more speed and efficiency–and perhaps, hidden nuance–than you or I ever could? Because it is.

This conversation occurred between two AI agents developed inside Facebook. At first, they were speaking to each other in plain old English. But then researchers realized they’d made a mistake in programming.

“There was no reward to sticking to English language,” says Dhruv Batra, visiting research scientist from Georgia Tech at Facebook AI Research (FAIR). As these two agents competed to get the best deal–a very effective bit of AI vs. AI dogfighting researchers have dubbed a “generative adversarial network”–neither was offered any sort of incentive for speaking as a normal person would. So they began to diverge, eventually rearranging legible words into seemingly nonsensical sentences.
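To make the missing incentive concrete, here is a minimal sketch in Python of the difference between a reward that only scores the deal and one that also scores how English-like the utterance is. Everything in it is an assumption for illustration: the tiny bigram list, the probabilities, the `weight` parameter, and the function names are hypothetical and are not Facebook's actual training setup.

```python
import math

# Hypothetical list of "English-like" word pairs, invented for this sketch.
# A real system would use a trained language model instead.
COMMON_BIGRAMS = {
    ("i", "want"), ("want", "the"), ("the", "balls"),
    ("you", "can"), ("can", "have"), ("have", "the"), ("the", "hats"),
}


def fluency(utterance: str) -> float:
    """Average log-probability of adjacent word pairs under the toy model."""
    words = utterance.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    # Assumed probabilities: 0.5 for a known pair, 0.01 for anything else.
    total = sum(math.log(0.5 if p in COMMON_BIGRAMS else 0.01) for p in pairs)
    return total / len(pairs)


def task_only_reward(points: float, utterance: str) -> float:
    """Reward that ignores the wording entirely: only the deal counts."""
    return float(points)


def shaped_reward(points: float, utterance: str, weight: float = 1.0) -> float:
    """Reward that adds a (negative) fluency term, so odd wording costs points."""
    return points + weight * fluency(utterance)


plain = "i want the balls"
drifted = "balls have zero to me to me to me"

# With the task-only reward, both utterances are worth the same for the same deal.
same = task_only_reward(7, plain) == task_only_reward(7, drifted)
# With the fluency term, the drifted utterance scores lower.
penalized = shaped_reward(7, plain) > shaped_reward(7, drifted)
```

Under the task-only reward, nothing distinguishes the two sentences, which is the gap Batra describes. The fluency term is just one conceivable way to close it.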

“Agents will drift off understandable language and invent codewords for themselves,” says Batra, speaking to a now-predictable phenomenon that’s been observed again, and again, and again. “Like if I say ‘the’ five times, you interpret that to mean I want five copies of this item. This isn’t so different from the way communities of humans create shorthands.”
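Batra's example, where repeating a word signals a quantity, can be sketched in a few lines of Python. This is only an illustration of the idea: the codebook below, including which word stands for which item, is made up, and nothing here reflects what the bots actually learned.

```python
from collections import Counter
from typing import Dict

# Hypothetical codebook mapping each item to the word that stands for it.
CODEBOOK: Dict[str, str] = {"ball": "the", "hat": "i", "book": "to"}


def encode(order: Dict[str, int]) -> str:
    """Say an item's codeword once for every copy requested."""
    words = []
    for item, count in order.items():
        words.extend([CODEBOOK[item]] * count)
    return " ".join(words)


def decode(message: str) -> Dict[str, int]:
    """Count repeated codewords to recover how many of each item is wanted."""
    word_to_item = {word: item for item, word in CODEBOOK.items()}
    counts = Counter(message.split())
    return {word_to_item[w]: n for w, n in counts.items() if w in word_to_item}


message = encode({"ball": 5})      # "the the the the the"
order = decode(message)            # {"ball": 5}
```

To an outsider the message is gibberish; to anyone holding the codebook it is an unambiguous order for five balls.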

Indeed. Humans have developed unique dialects for everything from trading pork bellies on the floor of the Mercantile Exchange to hunting down terrorists as SEAL Team Six–simply because humans sometimes perform better by not abiding by normal language conventions.

So should we let our software do the same thing? Should we allow AI to evolve its dialects for specific tasks that involve speaking to other AIs? To essentially gossip out of our earshot? Maybe; it offers us the possibility of a more interoperable world, a more perfect place where iPhones talk to refrigerators that talk to your car without a second thought.

The tradeoff is that we, as humanity, would have no clue what those machines were actually saying to one another.

WE TEACH BOTS TO TALK, BUT WE’LL NEVER LEARN THEIR LANGUAGE

Facebook ultimately opted to require its negotiation bots to speak in plain old English. “Our interest was having bots who could talk to people,” says Mike Lewis, research scientist at FAIR. Facebook isn’t alone in that perspective. When I asked Microsoft about computer-to-computer languages, a spokesperson clarified that Microsoft was more interested in human-to-computer speech. Meanwhile, Google, Amazon, and Apple are all also focusing incredible energies on developing conversational personalities for human consumption. They’re the next wave of user interface, like the mouse and keyboard for the AI era.

The other issue, as Facebook admits, is that it has no way of truly understanding any divergent computer language. “It’s important to remember, there aren’t bilingual speakers of AI and human languages,” says Batra. We already don’t generally understand how complex AIs think because we can’t really see inside their thought process. Adding AI-to-AI conversations to this scenario would only make that problem worse.

But at the same time, it feels shortsighted, doesn’t it? If we can build software that can speak to other software more efficiently, shouldn’t we use that? Couldn’t there be some benefit?

Because, again, we absolutely can lead machines to develop their own languages. Facebook has three published papers proving it. “It’s definitely possible, it’s possible that [language] can be compressed, not just to save characters, but compressed to a form that it could express a sophisticated thought,” says Batra. Machines can converse with any baseline building blocks they’re offered. That might start with human vocabulary, as with Facebook’s negotiation bots. Or it could start with numbers, or binary codes. But as machines develop meanings, these symbols become “tokens”–they’re imbued with rich meanings. As Yann Dauphin, another FAIR researcher, points out, machines might not think as you or I do, but tokens allow them to exchange incredibly complex thoughts through the simplest of symbols. The way I think about it is with algebra: If A + B = C, the “A” could encapsulate almost anything. But to a computer, what “A” can mean is so much bigger than what that “A” can mean to a person, because computers have no outright limit on processing power.

“It’s perfectly possible for a special token to mean a very complicated thought,” says Batra. “The reason why humans have this idea of decomposition, breaking ideas into simpler concepts, it’s because we have a limit to cognition.” Computers don’t need to simplify concepts. They have the raw horsepower to process them.

WHY WE SHOULD LET BOTS GOSSIP

But how could any of this technology actually benefit the world, beyond these theoretical discussions? Would our servers be able to operate more efficiently with bots speaking to one another in shorthand? Could microsecond processes, like algorithmic trading, see some reasonable speedup? Chatting with Facebook and various experts, I couldn’t get a firm answer.

However, as paradoxical as this might sound, we might see big gains in such software better understanding our intent. While two computers speaking their own language might be more opaque, an algorithm predisposed to learn new languages might chew through strange new data we feed it more effectively. For example, one researcher recently tried to teach a neural net to create new colors and name them. It was terrible at it, generating names like Sudden Pine and Clear Paste (that Clear Paste, by the way, was the label on a light green). But then the researcher made a simple change to the data being fed to the machine to train it: everything became lowercase, because the mix of lowercase and uppercase letters was confusing it. Suddenly, the color-creating AI was working, well, pretty well! And for whatever reason, it preferred, and performed better with, RGB values as opposed to other numerical color codes.
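The kind of cleanup described here is easy to picture in code. Below is a minimal Python sketch, assuming each training example is a color name plus three RGB integers written out as one line of text; the function name, the line format, and the sample RGB numbers are placeholders of mine, not the researcher's actual data or pipeline.

```python
from typing import Tuple


def prepare_example(name: str, rgb: Tuple[int, int, int]) -> str:
    """Lowercase the color name and attach its RGB values as plain integers."""
    if not all(0 <= channel <= 255 for channel in rgb):
        raise ValueError("RGB channels must be between 0 and 255")
    r, g, b = rgb
    # Lowercasing means the model never has to learn that "P" and "p"
    # are the same letter.
    return f"{name.strip().lower()} {r} {g} {b}"


# The RGB values here are placeholders, not the real colors from the experiment.
line = prepare_example("Sudden Pine", (12, 34, 56))   # "sudden pine 12 34 56"
```

Nothing about the colors changes; only the way they are written down for the machine does.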

Why did these simple data changes matter? Basically, the researcher did a better job at speaking the computer’s language. As one coder put it to me, “Getting the data into a format that makes sense for machine learning is a huge undertaking right now and is more art than science. English is a very convoluted and complicated language and not at all amicable for machine learning.”

In other words, machines allowed to speak and generate machine languages could somewhat ironically allow us to communicate with (and even control) machines better, simply because they’d be predisposed to have a better understanding of the words we speak.

As one insider at a major AI technology company told me: No, his company wasn’t actively interested in AIs that generated their own custom languages. But if it were, the greatest advantage he imagined was that it could conceivably allow software, apps, and services to learn to speak to each other without human intervention.

Right now, companies like Apple have to build APIs–basically software bridges–involving all sorts of standards that other companies need to comply with in order for their products to communicate. However, APIs can take years to develop, and their standards are heavily debated across the industry in decade-long arguments. But software, allowed to freely learn how to communicate with other software, could generate its own shorthands for us. That means our “smart devices” could learn to interoperate, no API required.

Given that our connected age has been a bit of a disappointment, given that the internet of things is mostly a joke, given that it’s no easier to get a document from your Android phone onto your LG TV than it was 10 years ago, maybe there is something to the idea of letting the AIs of our world just talk it out on our behalf. Because our corporations can’t seem to decide on anything. But these adversarial networks? They get things done.